I confess I was unaware of this facility within OS X. I had hoped to find something in Photos.app or the App Store, because I can’t find a way to transfer the classification results into metadata tags, which would make it a bit easier to find images in my library.

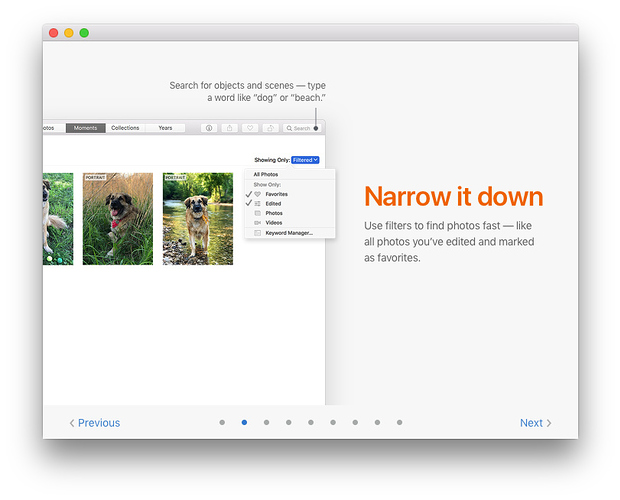

You can do this in Photos.app: just type the object or scene you are looking for into the search field. From the Photos.app Quick Tour:

That said, I think adding a “Write Classifications as Finder Tags” node would be useful, so the classifications could be used elsewhere.
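To make the Finder-tag idea a bit more concrete: macOS keeps a file’s Finder tags in the extended attribute `com.apple.metadata:_kMDItemUserTags`, stored as a binary property list containing an array of tag names. The sketch below is plain Python, not anything Retrobatch does today. It encodes classification labels that way and builds (without running) an `xattr -wx` command that would write them. The function names are hypothetical, and it assumes Finder accepts plain tag names without the optional color suffix. Note that writing this attribute replaces any tags the file already has.

```python
import plistlib
import shlex

# Extended attribute in which macOS stores a file's Finder tags.
TAGS_XATTR = "com.apple.metadata:_kMDItemUserTags"


def finder_tags_payload(labels):
    """Encode classification labels as a binary plist array of tag names."""
    # Drop duplicate labels while keeping the classifier's ordering.
    unique = list(dict.fromkeys(labels))
    return plistlib.dumps(unique, fmt=plistlib.FMT_BINARY)


def xattr_command(path, labels):
    """Build (but do not run) an `xattr` invocation that writes the tags."""
    payload = finder_tags_payload(labels)
    # `-w` writes the attribute; `-x` says the value is given as hex.
    return ["xattr", "-wx", TAGS_XATTR, payload.hex(), path]


if __name__ == "__main__":
    cmd = xattr_command("photo.jpg", ["basket", "wicker", "basket"])
    print(shlex.join(cmd))
```

Running the printed command on a Mac (for example with `subprocess.run(cmd, check=True)`) should make the labels appear as tags in Finder, which is what would make them usable outside Photos.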

Thanks, gingerbeardman, that’s also useful to know, but:

- none of these are discoverable inside the image metadata

- it’s impossible to know what keywords are contained in each album

- there’s no way of knowing what keywords are in use, apart from autocompletion

- the words in the invisible index must be a subset of the words derivable from CoreML analysis

For example, Retrobatch identified an image containing baskets, but Photos wouldn’t accept that search term and suggested, or perhaps forced, “basketball” instead.

Actually, what you suggest is another good idea, and it would apply to all files, not just photos.

The ML model that Retrobatch uses is probably different from the one macOS ships with. I’ve never gone digging through Photos to see what it uses, but that’s probably why RB found baskets and Photos didn’t.

Assigning classifications to Finder tags would be pretty cool; I’ll consider that a feature request.

-gus

> The ML Model that Retrobatch uses is probably different than the one MacOS ships with.

Really? Or did you mean Photos.app? If RB has its own image recognition tech, they should be selling it to Apple.

Or maybe RB is just ahead of Apple in adopting this API? I guess we’ll find out soon enough at WWDC.

CoreML is a set of developer APIs that we can use to load different models. Models are created by training them on sets of images. Those models can then be loaded with CoreML, and we pass images through them to get classification results.

So Retrobatch and Photos.app both use CoreML, but they use different models to do their classification.
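Here is a rough illustration of what passing an image through a classification model hands back. A classifier such as MobileNet produces a probability for every label it knows. With Apple’s MobileNet model this arrives as a dictionary output (I believe it is named `classLabelProbs`, but treat that name as an assumption). The hypothetical `top_labels` helper below keeps the few most confident labels, which is roughly what you would turn into keywords. Swapping in a different model changes which labels exist in that dictionary at all, which is one way two apps can disagree about baskets and basketballs.

```python
def top_labels(probs, k=3, min_confidence=0.1):
    """Return up to k (label, probability) pairs, most confident first.

    `probs` is assumed to map label -> probability, the usual shape of a
    classifier's probability output.
    """
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    # Keep only the k best labels, and drop any below the confidence floor.
    return [(label, p) for label, p in ranked[:k] if p >= min_confidence]


if __name__ == "__main__":
    # Made-up numbers for illustration, not real model output.
    example = {"basket": 0.62, "hamper": 0.21, "basketball": 0.04, "bucket": 0.13}
    for label, p in top_labels(example):
        print(f"{label}: {p:.2f}")
```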

Retrobatch also lets you select other models. You can find a handful of them at https://coreml.store. Retrobatch ships with the MobileNet model, which is also available for download from that site.

-gus

Gus,

My son-in-law is building a plane in his garage. He has a Raspberry Pi camera set up to start snapping photos after the lights come on. We need a way to sort out the images where people are not in the picture. I tried Retrobatch with a few different .mlmodel files from coreml.store, but none of them are good at picking out people.
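If a model did report a usable person score, the sorting step itself is simple. This sketch assumes you already have, for each image, a label-to-probability dictionary from some classifier. The `results` shape, the label names, and the 0.5 threshold are all hypothetical. One caveat, as far as I know: ImageNet-trained models like MobileNet have no generic “person” class, only things like “scuba diver” or “groom”, which may be part of why they struggle here. That is why `person_labels` accepts several labels.

```python
def split_by_person(results, person_labels=("person",), threshold=0.5):
    """Split image paths into (with_people, without_people).

    `results` maps an image path to a {label: probability} dict produced by
    some classifier. An image counts as "with people" when any label in
    `person_labels` reaches `threshold`.
    """
    with_people, without_people = [], []
    for path, probs in results.items():
        # Best score among the labels we treat as "a person is present".
        score = max((probs.get(label, 0.0) for label in person_labels), default=0.0)
        bucket = with_people if score >= threshold else without_people
        bucket.append(path)
    return sorted(with_people), sorted(without_people)


if __name__ == "__main__":
    # Made-up scores for illustration only.
    results = {
        "garage_0001.jpg": {"airliner": 0.7, "person": 0.1},
        "garage_0002.jpg": {"person": 0.8},
    }
    with_people, plane_only = split_by_person(results)
    print("keep:", plane_only)
    print("skip:", with_people)
```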

Do you know the best way to train a model using sample images?

By the way, I love Retrobatch Pro!

I don’t know of a good way to do this today.

But Apple introduced some awesome new developer features last week, which will be showing up in the next big release of macOS in the fall (macOS 10.14 Mojave). One of those new features is the ability to make our own models.

So (and this hasn’t been written yet, so it’s speculation) I plan on adding a feature to Retrobatch that would let you make your own model. Basically, you would have a folder of “good images” (i.e., images with just the plane in them) and a folder of “bad images” with folks in them. You would then run them through RB, and it would spit out a new model that you could use.
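For reference, the Create ML framework in Mojave can, to my knowledge, train an image classifier from a folder that contains one subfolder per label (`MLImageClassifier` with its `labeledDirectories` data source), which maps neatly onto the good/bad folders described above. The Python sketch below only prepares that folder layout. It is not Retrobatch’s planned feature, and the `build_labeled_directories` name and the “good”/“bad” label names are illustrative.

```python
import shutil
from pathlib import Path


def build_labeled_directories(root, examples):
    """Copy example images into root/<label>/ subfolders.

    `examples` maps a label (e.g. "good", "bad") to a list of image paths.
    Files with the same name under one label overwrite each other.
    Returns the number of images copied per label.
    """
    root = Path(root)
    counts = {}
    for label, paths in examples.items():
        target = root / label
        target.mkdir(parents=True, exist_ok=True)
        for src in paths:
            # copy2 also preserves timestamps, which helps when auditing samples.
            shutil.copy2(src, target / Path(src).name)
        counts[label] = len(paths)
    return counts
```

For example, `build_labeled_directories("training", {"good": plane_only, "bad": with_people})` would produce `training/good/` and `training/bad/`, ready to hand to a trainer that expects labeled directories.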

That’s probably going to be the easiest way in the future. For today, though, I don’t know of any good way to train models. (There is https://lobe.ai, but it’s in beta and I haven’t been able to get access to it quite yet.)

Thanks! It looks like the Lobe software would allow end users to create their own models. Would an exported model work with the current version of Retrobatch?

Thanks again.

From what the Lobe website says, yes.

You could download a CoreML model and put it in your `~/Library/Application Support/Retrobatch/MLModels/` folder, and Retrobatch would then let you select it from the Classification node.
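Copying a downloaded model into place can be scripted. This sketch uses the folder path from the reply above, creates it if needed, and assumes Retrobatch picks up uncompiled `.mlmodel` files from it. Whether compiled `.mlmodelc` bundles also work is not something I can confirm. `install_model` is a hypothetical helper; `models_dir` is a parameter mainly so it can be pointed at a scratch folder for testing.

```python
import shutil
from pathlib import Path

# Folder named in the reply above; created on first use if missing.
MODELS_DIR = Path.home() / "Library" / "Application Support" / "Retrobatch" / "MLModels"


def install_model(model_path, models_dir=MODELS_DIR):
    """Copy a .mlmodel file into Retrobatch's MLModels folder."""
    model_path = Path(model_path)
    if model_path.suffix != ".mlmodel":
        raise ValueError(f"expected a .mlmodel file, got {model_path.name}")
    models_dir = Path(models_dir)
    models_dir.mkdir(parents=True, exist_ok=True)
    destination = models_dir / model_path.name
    shutil.copy2(model_path, destination)
    return destination
```

Usage would look like `install_model(Path.home() / "Downloads" / "MyModel.mlmodel")`, where the file name is just a placeholder; the model should then appear in the Classification node’s model list.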

@deank Maybe you could tweak the Pi setup so it doesn’t take photos when it senses movement in the field of view?
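A common way to sense movement without extra hardware is frame differencing: compare each new frame with the previous one and treat a large average change as motion. Here is a minimal pure-Python sketch, assuming frames arrive as equally sized 2-D lists of 0-255 grayscale values. How the Pi camera delivers frames, and the threshold of 10, are assumptions you would need to tune. Real setups usually also downscale or blur frames first so sensor noise doesn’t count as motion.

```python
def mean_abs_difference(frame_a, frame_b):
    """Average per-pixel absolute difference between two grayscale frames."""
    if len(frame_a) != len(frame_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(frame_a, frame_b)
    ):
        raise ValueError("frames must have the same dimensions")
    pixels = sum(len(row) for row in frame_a)
    if pixels == 0:
        raise ValueError("frames must not be empty")
    total = sum(
        abs(a - b)
        for row_a, row_b in zip(frame_a, frame_b)
        for a, b in zip(row_a, row_b)
    )
    return total / pixels


def motion_detected(previous, current, threshold=10.0):
    """True when the average pixel change exceeds `threshold` (0-255 scale)."""
    return mean_abs_difference(previous, current) > threshold


if __name__ == "__main__":
    still = [[100, 100], [100, 100]]
    someone_walks_in = [[100, 100], [200, 200]]
    print(motion_detected(still, still))             # no change between frames
    print(motion_detected(still, someone_walks_in))  # half the pixels changed a lot
```

With this in place, the capture loop could skip saving a photo whenever `motion_detected` returns True, since a person walking through is the most likely source of motion in a quiet garage.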